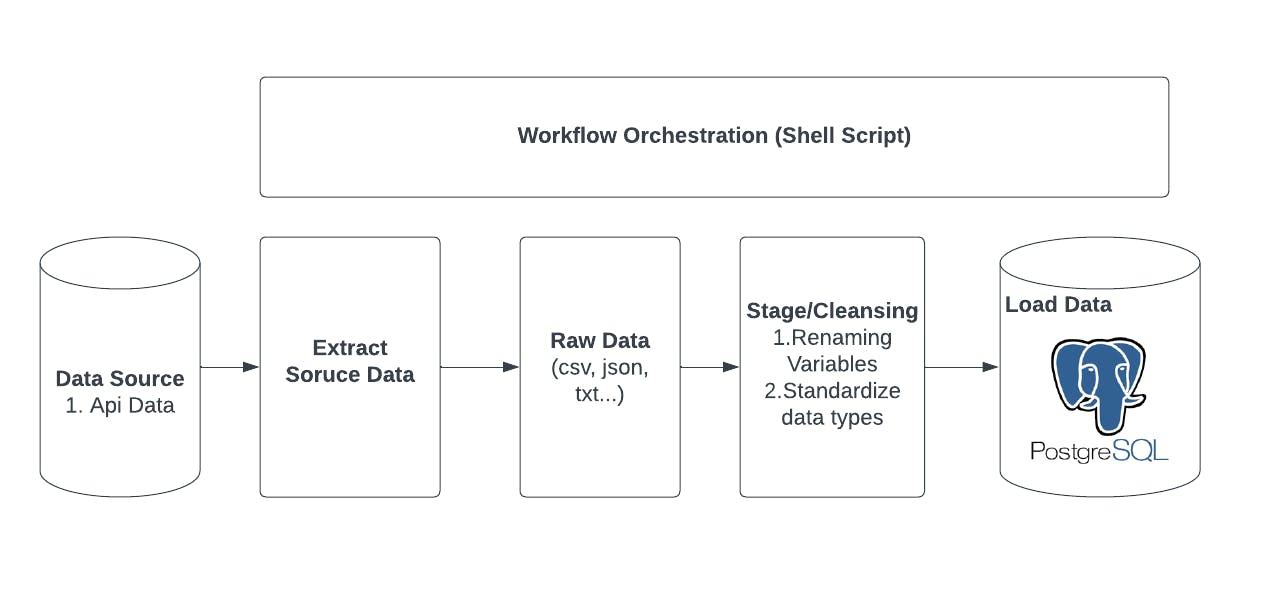

Extract, Transform, and Load (ETL) processes are used when flexibility, speed, and scalability of data are crucial in an organization. It is used to automate the process of curating data from multiple sources, transforming it into a unified structure and then loading it into its new environment (data warehouse or database). In this article you will learn how to.

- Extract data from an Application programming interface (API) data

- Transform the extracted data to a CSV file.

- Load your CSV file to your postgreSQL database.

Prerequisite

- Basic knowledge of shell scripting.

- Basic knowledge of SQL

- Postgres command line (CLI) installed on your machine.

Extracting data from Data source

The data required for extraction can be found in the free coinstat API. The only pieces of data needed for this article are the crypto currency symbol and the price, which you would need to extract using the curl linux command. The curl linux command is used to scrape data from a website data source. Getting the data from a particular data source won't be enough to get the required information you need to extract from the API, so you will pipe ( | ) the grep linux command to get the exact information you need from the API as shown below.

| - stands for pipe (It takes a particular output from a command and uses the output to make decisions with the command after it to produce a new result.)

|\ - means a new line

The >> command copies the extracted data content into a txt file.

#Extract phase

echo "Extracting Data"

# Extract the columns 1 (symbol), 2 (price) from

# (The api source)- coinstats

curl -s --location --request GET https://api.coinstats.app/public/v1/coins/bitcoin\?currency\=USD |\

grep -oE "\"symbol\""|\

tr -d '" ' >> symbol.txt

curl -s --location --request GET https://api.coinstats.app/public/v1/coins/ |\

grep -oE "\"symbol\":\s*\"[A-Z]*\"" |\

grep -oE "\"[A-Z]*\"" |\

tr -d '" ' >> symbol.txt

curl -s --location --request GET https://api.coinstats.app/public/v1/coins/bitcoin\?currency\=USD |\

grep -oE "\"price\""|\

tr -d '" ' >> price.txt

curl -s --location --request GET https://api.coinstats.app/public/v1/coins/ |\

grep -oE "\"price\":\s*[0-9]*?\.[0-9]*" |\

grep -oE "[0-9]*?\.[0-9]*" >> price.txt

Transforming your data

The transformation phase involves wrangling your data to ensure it adheres to best data structuring practices that is suitable or useful to the end users of the data. This phase ensures that your data is well suited for the new environment (data warehouse) it will be loaded into. Now you have gotten the required data you need, you will need to convert it into a CSV file format suited for your data warehouse using the following code below.

#Transform phase

echo "Tranforming Data"

# read the extracted data, merge both files together and replace the colons with commas.

paste -d "," symbol.txt price.txt >> cryptoprice.csv

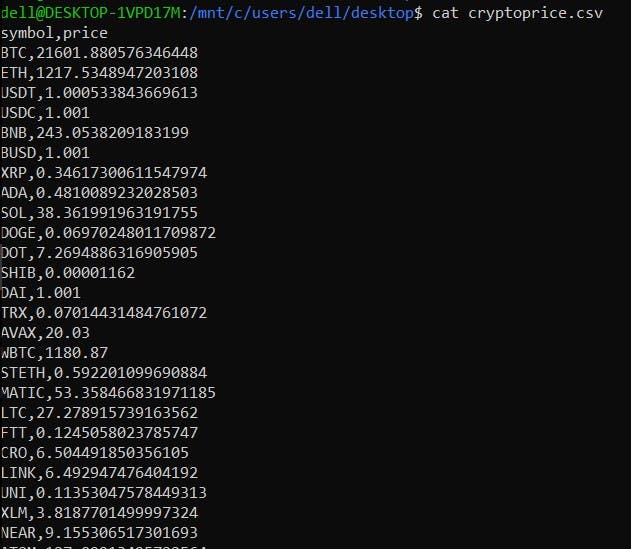

The paste in the illustration above joins the symbol.txt file to the price.txt file and creates a new file called cryptoprice.csv. You will also notice that -d "," is included in the code above, which tells our program to include a comma after every symbol in the new file created, as shown in the image below.

Loading your CSV file into your PostgreSQL database

In the loading phase, which is the last phase in the ETL process, you will need to ensure you have created your database and table with the right data types assigned to each attribute, symbol and price, which are used for this article. You will need to create your database and tables using the code as shown below.

This will serve as the pre-loading phase before loading into your database.

#pre-Loading phase

echo "Loading Data"

#connecting to Postgres CLI (command line)

psql --username=postgres --host=localhost

#Creating a Database cyrptoprice

CREATE DATABASE cryptoprice;

#connecting to database cryptoprice

\c cryptoprice;

#Creating table prices

create table prices(symbol varchar(7),price varchar(23));

#To Check if table (prices) was created

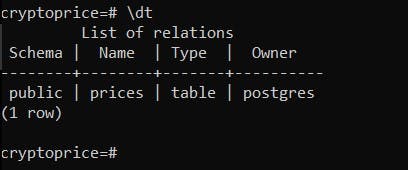

\dt

Once you have been able to create your table, you should be able to view it in your database using the \dt command as shown above. You should get something like this.

Now that you have created your table (prices), you can now begin the loading phase. First of all, you will need to quit the PostgreSQL command line using the \q command. This will take you back to your linux terminal. You can now insert your data from your cryptoprice.csv file into your database using the code as shown below.

# Load phase

echo "Loading data"

# Send the instructions to connect to 'cryptoprice database' and

# copy the file to the table 'Prices' through command pipeline.

#\c - to connect to your database.

#\COPY prices FROM 'cryptoprice.csv' DELIMITERS ',' CSV HEADER;- to copy your CSV file from your file directory to your table prices in your database.

echo "\c cryptoprice;\COPY prices FROM 'cryptoprice.csv' DELIMITERS ',' CSV HEADER;" | psql --username=postgres --host=localhost

This should give you an output of 97 rows inserted into your database table. If not, cross check your data types and ensure your spellings are correct.

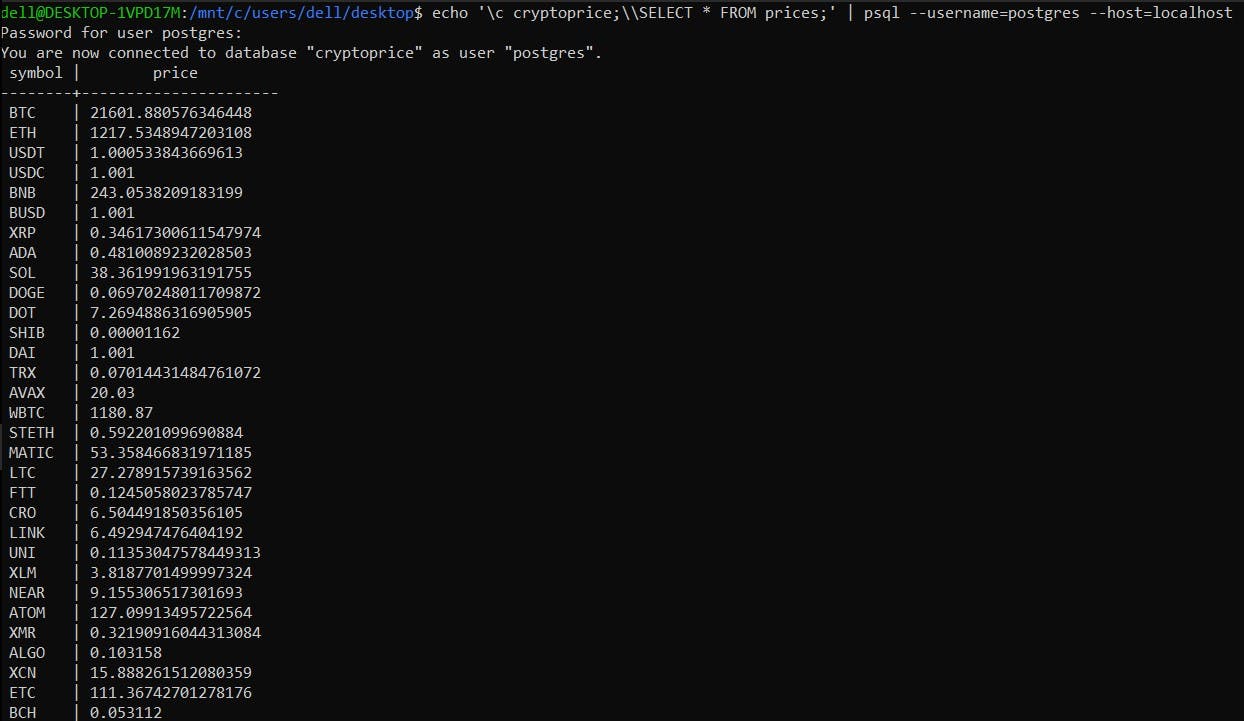

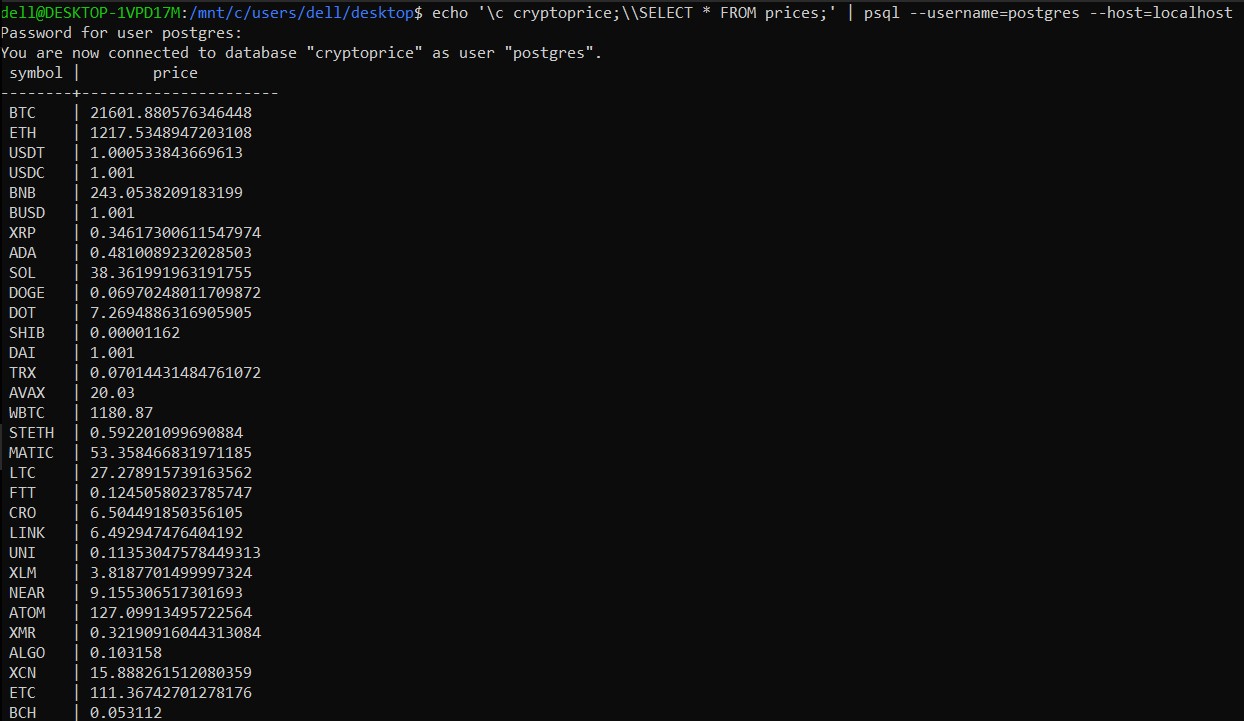

To verify that the table was populated with your CSV data, you can check using the code below.

# list out the output of the table prices

echo '\c cryptoprice;\\SELECT * FROM prices;' | psql --username=postgres --host=localhost

Your result should look like this.

Now that you have been able to load your CSV file into your database, you will need to save all your ETL commands as a bash file, which can be used to automate the whole ETL process when executed. You can get a copy of the bash file from my github repo here.

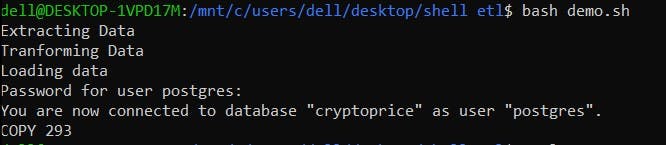

Run the finished script.

Execute the script.

bash demo.sh

Run the command shown below to ensure that the data is present in your cryptoprice database.

echo '\c cryptoprice; \\SELECT * from prices;' | psql --username=postgres --host=localhost

Your output should look like the example below, showing the data from the table (prices) in your postgreSQL database.

Congratulations! Using shell scripting, you have written an ETL script.

Conclusion

This article has taught you how to run an ETL pipeline using shell scripting and load your CSV data into your PostgreSQL database. Alongside, you have learnt how to extract data from an API using the curl linux command.

If you have any questions, don't hesitate to contact me on twitter :).